GPT-5-Codex: OpenAI’s New Specialty Model for Developers

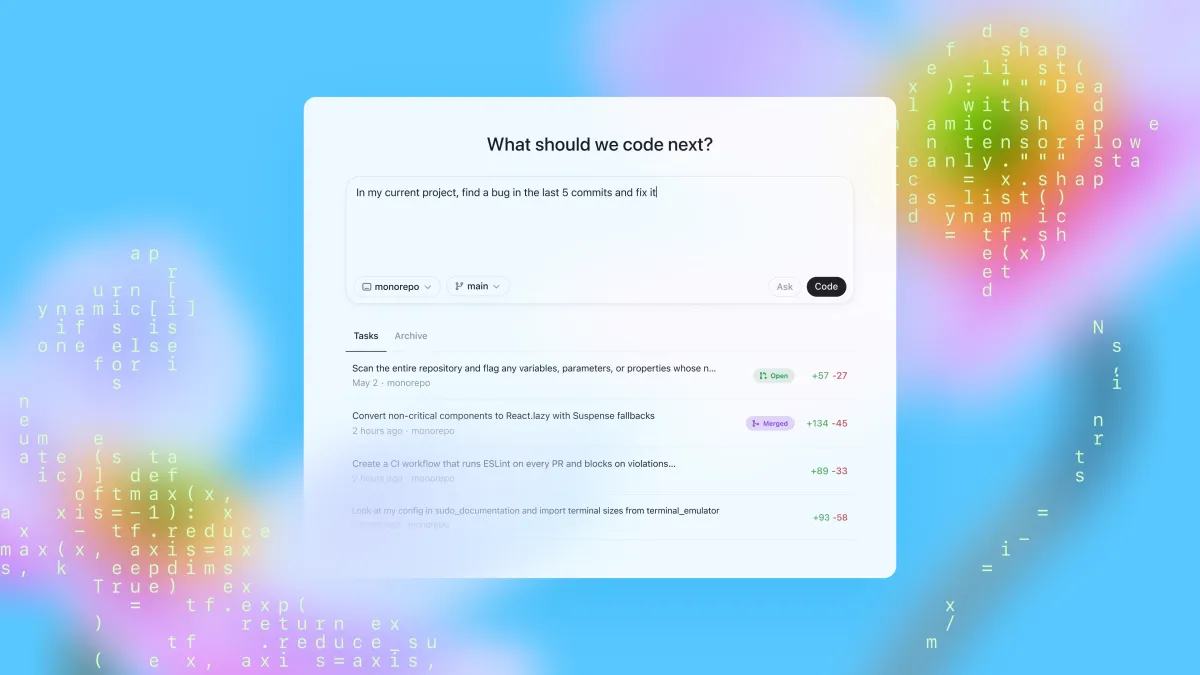

OpenAI has introduced GPT-5-Codex, a new specialty model tuned for programming. It promises sharper performance on both precise code edits and extended workflows, a shift that could redraw the competitive map between Codex, Claude Code, Copilot and fast-rising challengers like Cursor.

On September 15, OpenAI upgraded **Codex**, its coding agent, by introducing **GPT-5-Codex**, a variant tuned for software work. The model adapts its “thinking time” to task complexity, aiming to be frugal on precise modifications yet persistent on extended workflows. OpenAI frames this as a step toward dependable agentic coding. [OpenAI Launch Post](https://openai.com)

Product: Efficiency as the Feature

The release tackles two routine failings of coding assistants: over-eager rewrites when a narrow change is requested, and poor reliability on multi-step projects. GPT-5-Codex is pitched to do the opposite, being:

- **restrained** on targeted adjustments;

- **durable** across complex sequences.

It aims to get there through better task understanding and dynamic allocation of compute. OpenAI’s system-card addendum describes the model as trained with reinforcement learning on real pull requests and evaluation loops. [System Card](https://openai.com)

OpenAI also signals a broader view: Codex pairing with developers interactively, then continuing independently on long tasks, with session persistence across local and cloud contexts.

Strategy: The Coding Niche Is Sticky

For much of the past year, Anthropic’s Claude Code held the reputation edge in programming, while OpenAI dominated the mass consumer market. The balance shows signs of shifting. Coverage paints GPT-5-Codex as a credible challenger on “agentic” benchmarks, and executives talk up fleets of cloud-hosted agents operating under human supervision. If developers move their daily coding to Codex, OpenAI gains not just usage but a stream of high-value reinforcement data. [Business Insider](https://www.businessinsider.com)

Anthropic, meanwhile, has pushed on a different front: creating and editing files (native Excel models, PowerPoint decks and PDFs), focusing on enterprise productivity rather than shipping a headline “next Claude Code” this month. [TechCrunch](https://techcrunch.com)

Competitive Context: A Crowded, Fast-Growing Field

- Cursor (Anysphere) has become the momentum story in IDE-centric “vibe coding,” surpassing an estimated $500m ARR and raising at a $9–9.9bn valuation. Growth suggests durable demand for assistants embedded directly in the editor. Cursor is now layering reliability tools (e.g., Bugbot) atop code generation. [The Information](https://www.theinformation.com) [TechCrunch](https://techcrunch.com) [Business Insider](https://www.businessinsider.com)

- GitHub Copilot continues to ship enterprise features (agent mode, repo-aware instructions files) and frequent IDE updates. Microsoft’s distribution remains a moat even as model choice evolves. [GitHub Blog](https://github.blog)

- JetBrains AI Assistant is advancing on multi-language completion, offline/local options and richer context; the local options in particular are catnip for regulated environments. [JetBrains Blog](https://blog.jetbrains.com)

Taken together, the category is maturing from “faster typing” to workflow ownership (planning → coding → review → test → docs), with vendors competing on correctness, observability and enterprise controls rather than raw code volume.

What Changes if Codex Sticks

If GPT-5-Codex truly reduces rework on both ends (fine-grained changes and complex sequences), developers will switch for the simple reason that quality compounds. Tool choice is path-dependent: once teams wire a model into CI, PR templates and policy gates, switching away is rare. That lock-in is reinforced by data: the platform that sees more real diff-level feedback can learn faster.

OpenAI’s messaging on long-lived agents suggests a complementary move: keep humans in the loop for goals and review, and let agents grind through chores for hours if needed. Competitors will answer, and rumours swirl around Anthropic’s next Opus iteration, but today’s launch gives OpenAI a firmer hand in the coding niche.

What to Watch (and Measure)

- PR quality deltas: acceptance rate, revert rate, defect density in AI-touched diffs versus human-only baselines.

- Time-to-merge on targeted adjustments; cycle time on complex sequences.

- Agent run-time economics: if runs spanning “seconds to seven hours” become routine, who pays, how is work cached, and how is it observed?

- Ecosystem pull: IDE integrations, repo-policy hooks, test frameworks, and secrets management—areas where Copilot and JetBrains are entrenched.

- Feature parity vs. differentiation: Anthropic’s file-creation push may win operations teams even if engineers favour Codex; watch org-level standardisation.
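The first two items can be tracked with a small script over your own PR history. What follows is a minimal sketch under stated assumptions: the `PullRequest` record and its fields are hypothetical (real data would come from your Git host and issue tracker), revert rate is measured over merged PRs only, and defect density is expressed per 1,000 changed lines. A negative delta on revert rate, defect density or time-to-merge favours the AI-touched cohort.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class PullRequest:
    """Hypothetical PR record; field names are illustrative, not from any real API."""
    ai_touched: bool                        # True if an assistant authored part of the diff
    merged: bool                            # accepted into the main branch
    reverted: bool                          # reverted after merging
    lines_changed: int                      # added + deleted lines in the diff
    defects: int                            # bugs later traced back to this diff
    hours_to_merge: Optional[float] = None  # None if never merged


def cohort_metrics(prs: list[PullRequest]) -> dict[str, float]:
    """Acceptance rate, revert rate, defects per 1,000 changed lines, median hours to merge."""
    merged = [p for p in prs if p.merged]
    total_lines = sum(p.lines_changed for p in merged)
    merge_times = [p.hours_to_merge for p in merged if p.hours_to_merge is not None]
    return {
        "acceptance_rate": len(merged) / len(prs) if prs else 0.0,
        # Revert rate is computed over merged PRs only (an assumption of this sketch).
        "revert_rate": sum(p.reverted for p in merged) / len(merged) if merged else 0.0,
        "defects_per_kloc": 1000 * sum(p.defects for p in merged) / total_lines if total_lines else 0.0,
        "median_hours_to_merge": median(merge_times) if merge_times else 0.0,
    }


def quality_deltas(prs: list[PullRequest]) -> dict[str, float]:
    """AI-touched cohort minus the human-only baseline, metric by metric."""
    ai = cohort_metrics([p for p in prs if p.ai_touched])
    human = cohort_metrics([p for p in prs if not p.ai_touched])
    return {name: ai[name] - human[name] for name in ai}
```

Keeping the baseline in the same repositories and time window matters more than the exact formulas; comparing against public benchmarks would miss the rework your own codebase generates.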

The Line for Leaders

- Treat model choice as a procurement decision with telemetry: run bake-offs on your code, not benchmarks.

- Optimise for rework avoided, not tokens consumed.

- Standardise guardrails (review gates, test coverage, secrets) before scaling agents with multi-hour runs.

- Expect a multi-model estate; keep swaps cheap by placing an adapter layer between repos and providers.
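The adapter and bake-off advice above can be combined in one small harness. This is a sketch under assumptions: `CodeAssistant`, `StubAssistant` and `bake_off` are hypothetical names, the stub returns canned patches instead of calling any real vendor SDK, and the `accept` callback stands in for whatever check your team trusts (for example, running CI on a scratch branch).

```python
from typing import Callable, Protocol


class CodeAssistant(Protocol):
    """Hypothetical provider-neutral interface; each vendor SDK would sit behind one implementation."""
    name: str

    def propose_patch(self, task: str) -> str:
        """Return a proposed patch (e.g. a unified diff as text) for a task description."""
        ...


class StubAssistant:
    """Stand-in provider returning canned patches; replace with a wrapper around a real SDK."""

    def __init__(self, name: str, patches: dict[str, str]) -> None:
        self.name = name
        self._patches = patches

    def propose_patch(self, task: str) -> str:
        # Unknown tasks yield an empty patch, which the acceptance check should reject.
        return self._patches.get(task, "")


def bake_off(
    assistants: list[CodeAssistant],
    tasks: list[str],
    accept: Callable[[str, str], bool],
) -> dict[str, float]:
    """Share of your own tasks for which each assistant's patch passes the acceptance check."""
    scores: dict[str, float] = {}
    for assistant in assistants:
        passed = sum(accept(task, assistant.propose_patch(task)) for task in tasks)
        scores[assistant.name] = passed / len(tasks) if tasks else 0.0
    return scores
```

Because repos depend only on the `CodeAssistant` interface, adding or dropping a provider means writing one wrapper class rather than touching every pipeline that calls it.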

The novelty here is not flash but durability. If Codex reliably respects narrow asks while carrying complex work to completion, that is enough to move share. In a market where positions tend to persist, that matters.

References

- [OpenAI Launch Post](https://openai.com)

- [OpenAI System Card](https://openai.com)

- [TechCrunch coverage of Codex and Anthropic connectors](https://techcrunch.com)

- [Business Insider on OpenAI’s agent ambitions](https://www.businessinsider.com)

- [The Information on Cursor valuation](https://www.theinformation.com)

- [GitHub Blog on Copilot updates](https://github.blog)

- [JetBrains Blog on AI Assistant](https://blog.jetbrains.com)