Friday, August 29 • AI Product Intelligence

AI Product Intel (Aug 29): OpenAI’s gpt-realtime + Realtime API unlock natural voice + multimodal AI. Google drops HEAL (health equity), GraphQA (reasoning with graphs), ScreenAI (UI understanding), and MELON (3D from images). 🚀

Rise and grind, AI product builders! ☕

Today's Summary: The latest AI news covers a range of research and product developments, including advancements in computer-aided diagnosis for lung cancer screening, frameworks for assessing health equity in machine learning models, and techniques for encoding graph data for large language models. These innovations have practical implications for product teams working on AI-powered healthcare, decision support, and natural language processing applications. While the research shows steady progress, product managers should focus on carefully evaluating the real-world feasibility and potential impact of these technologies within their specific product context.

Coverage: 168 stories analyzed • 38 high-priority items

🎣 THE HOOK

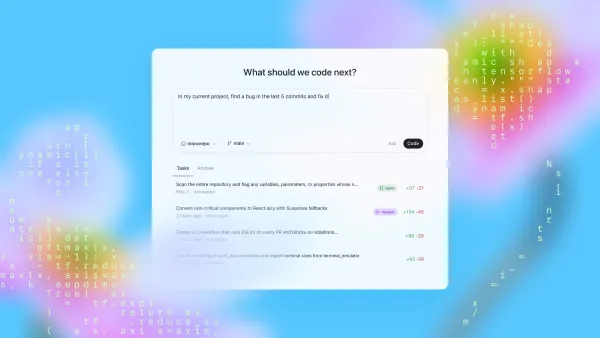

Worth your attention: Introducing gpt-realtime and Realtime API updates

OpenAI's latest advancements in speech-to-speech modeling and API capabilities open up new opportunities for AI product teams. The gpt-realtime model enables more natural, human-like voice interactions, while the Realtime API's expanded functionality, including image input and SIP phone calling support, allows developers to integrate conversational AI into a wider range of applications and use cases. These updates let product teams build more immersive, multimodal experiences for end users.

Source: OpenAI Blog (read more)

⚡ HIGH VOLTAGE

1. HEAL: A framework for health equity assessment of machine learning performance

TLDR: Healthcare AI that could pass regulatory hurdles

Researchers at Google have developed a framework called HEAL (Health Equity Assessment of machine Learning performance) to help ensure that machine learning (ML) models used in healthcare do not exacerbate existing health disparities. The article highlights how skin cancer outcomes are worse for minority populations, those with lower socioeconomic status, and those with limited healthcare access.

The HEAL framework provides a way to quantitatively assess whether an ML-based healthcare tool performs equitably across different patient groups, prioritizing performance for those with the worst health outcomes.
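To make "prioritizing performance for those with the worst health outcomes" concrete, here is a minimal sketch of one way to check it: rank subgroups by health burden and by model performance, then correlate the two rankings. This is a simplified illustration, not the HEAL metric as published; the function names, the group labels, and the numbers are all hypothetical.

```python
from typing import Dict, List


def average_ranks(values: List[float]) -> List[float]:
    """Return 1-based ranks; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = mean_rank
        i = j + 1
    return result


def pearson(xs: List[float], ys: List[float]) -> float:
    """Plain Pearson correlation; raises if either input is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (var_x * var_y) ** 0.5


def equity_correlation(burden: Dict[str, float],
                       performance: Dict[str, float]) -> float:
    """Spearman-style correlation between health burden and model performance.

    Positive values mean the model tends to perform better for groups with
    a higher burden (worse outcomes); negative values mean the opposite.
    """
    if set(burden) != set(performance):
        raise ValueError("both inputs must cover the same groups")
    groups = sorted(burden)
    return pearson(average_ranks([burden[g] for g in groups]),
                   average_ranks([performance[g] for g in groups]))


# Hypothetical subgroups: higher burden = worse existing health outcomes.
burden = {"group_a": 1.0, "group_b": 2.0, "group_c": 3.0}
performance = {"group_a": 0.70, "group_b": 0.80, "group_c": 0.90}
score = equity_correlation(burden, performance)
```

A positive score suggests the tool is doing best where outcomes are worst; a negative one is a signal to investigate before shipping. A real assessment would also need confidence intervals and clinically meaningful definitions of both burden and performance.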

This is crucial for AI product teams developing healthcare technologies, as they need to ensure their models don't inadvertently worsen health inequities. For example, your team could use the

📍 Google Research Blog • Score: 0.83 🔥 • deep dive

2. Talk like a graph: Encoding graphs for large language models

TLDR: Graph-to-text encoding for LLMs, with enterprise applications

Researchers at Google have developed a new technique to help large language models (LLMs) better understand and reason about graphs. Graphs are a powerful way to represent complex relationships, but LLMs typically struggle with this type of structured data.

The researchers designed a "GraphQA" benchmark to test different approaches for translating graphs into a format that LLMs can understand. They found that LLM performance on graph-related tasks depends on factors like the complexity of the graph structure and the specific reasoning required. By addressing these challenges, the researchers have opened up new possibilities for applying LLMs to a wider range of real-world problems that involve analyzing interconnected data.
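As a rough illustration of what "translating graphs into a format that LLMs can understand" can look like, the sketch below turns the same small graph into two different text prompts. The function names and the exact wording of the sentences are assumptions made for this example; they are not the encoders or prompts used in the GraphQA benchmark.

```python
from typing import Dict, List, Tuple

Edge = Tuple[int, int]


def encode_edge_list(num_nodes: int, edges: List[Edge]) -> str:
    """Describe an undirected graph as a flat list of node pairs."""
    nodes = ", ".join(str(n) for n in range(num_nodes))
    edge_text = " ".join(f"({u}, {v})" for u, v in edges)
    return (f"G describes an undirected graph among nodes {nodes}. "
            f"The edges in G are: {edge_text}.")


def encode_neighbors(num_nodes: int, edges: List[Edge]) -> str:
    """Describe the same graph node by node, listing each node's neighbors."""
    neighbors: Dict[int, List[int]] = {n: [] for n in range(num_nodes)}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)
    sentences = []
    for n in range(num_nodes):
        if neighbors[n]:
            listed = ", ".join(str(m) for m in sorted(neighbors[n]))
            sentences.append(f"Node {n} is connected to nodes {listed}.")
        else:
            sentences.append(f"Node {n} has no connections.")
    return " ".join(sentences)


# A three-node path graph: 0 - 1 - 2
edges = [(0, 1), (1, 2)]
edge_prompt = encode_edge_list(3, edges)
neighbor_prompt = encode_neighbors(3, edges)
question = "Is there a path from node 0 to node 2?"
```

Either prompt can be placed ahead of the question and sent to a model. Comparing answer accuracy across encodings like these, on the same graphs and tasks, is the kind of experiment a benchmark of this sort supports.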

📍 Google Research Blog • Score: 0.83 🔥 • deep dive

3. ScreenAI: A visual language model for UI and visually-situated language understanding

TLDR: LLM research that might influence your model selection

Google researchers have developed ScreenAI, a visual language model that can understand and interact with user interfaces (UIs) and infographics, such as charts and diagrams. ScreenAI is based on the PaLI architecture, with the flexible patching strategy from Pix2Struct added, allowing it to handle the complexity and varied formats of these visual elements.

The key innovation is a novel "Screen Annotation" task that teaches ScreenAI to identify critical information about UI elements, including their type, location, and description. This enables large language models to automatically generate datasets for tasks like question-answering, UI navigation, and summarization. At just 5 billion parameters, ScreenA

📍 Google Research Blog • Score: 0.82 🔥 • deep dive

4. Using AI to expand global access to reliable flood forecasts

TLDR: AI for social good - scalable disaster prediction technology

Researchers at Google have developed a powerful AI-based system that can significantly improve global flood forecasting, especially in regions where data is scarce. The system, described in the paper "Global prediction of extreme floods in ungauged watersheds" published in Nature, leverages machine learning to extend the reliability of current global flood forecasts from zero to five days, and deliver forecasts across Africa and Asia that are on par with what is available in Europe.

This matters because floods are the most common natural disaster, causing roughly $50 billion in annual damages worldwide, with nearly 1.5 billion people exposed to substantial flood risk. By enabling more accurate and timely flood forecasts, this AI system can help save thousands of lives per

📍 Google Research Blog • Score: 0.81 🔥 • deep dive

5. MELON: Reconstructing 3D objects from images with unknown poses

TLDR: Research with potential product applications within 12-18 months

Researchers at Google have developed a new algorithm called MELON that can reconstruct 3D objects from a few 2D images, even when the camera positions are unknown. This is a long-standing challenge in computer vision, with applications ranging from e-commerce to autonomous vehicles.

The key innovation is how MELON addresses the "chicken and egg" problem: you need the camera poses to reconstruct the 3D object, but you need the 3D object to infer the camera poses. MELON tackles this with a lightweight CNN encoder that regresses camera poses from the training images, plus a modulo loss that accounts for the object's pseudo-symmetries, which would otherwise make several poses look equally plausible.

📍 Google Research Blog • Score: 0.80 🔥 • deep dive

Generated with 🧠 by AI Content Discovery • 2025-08-29 10:21 UTC

Sources: Research papers, industry blogs, technical communities, and the occasional Twitter rabbit hole